Supercomputing Predictions: Custom CPUs, CXL3.0, and Petalith Architectures

This post started out as a reaction to attending SC22 after about 20 years away from the HPC mainstream. I had a version of this published as a story at InsideHPC on December 1st. In early January a related paper, Myths and Legends of High Performance Computing, was published by Satoshi Matsuoka et al. It’s a somewhat light-hearted look at some of the same issues by the leader of the team that built the Fugaku system I mention below. Luckily I think we are in general agreement on the main points.

Here are some predictions I’m making:

Jack Dongarra’s efforts to highlight the low efficiency of the HPCG benchmark as an issue will influence the next generation of supercomputer architectures to optimize for sparse matrix computations.

Custom HPC optimized CPUs and GPUs will become the mainstream in the next few years, with chiplets based on the ARM and RISC-V pattern libraries and tooling, with competition between cloud providers, startups and the large systems vendors.

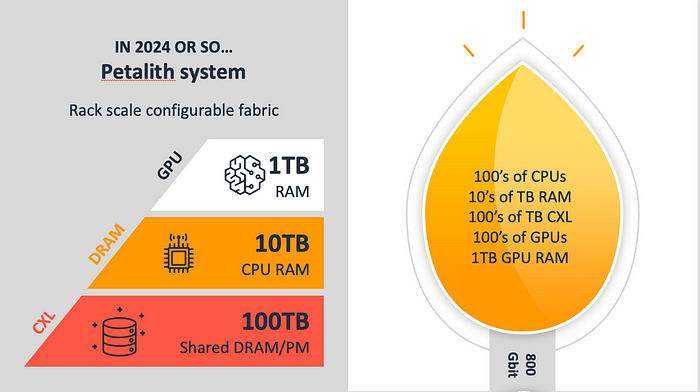

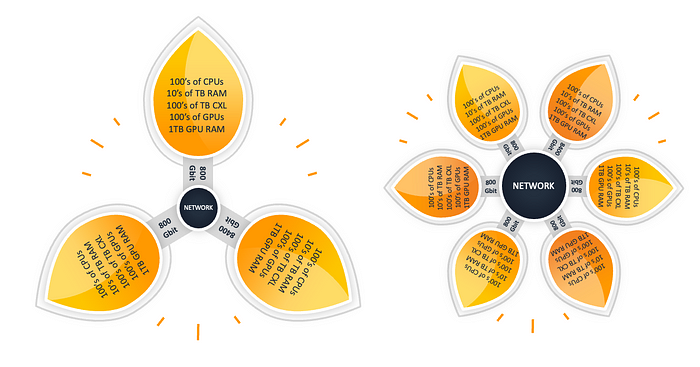

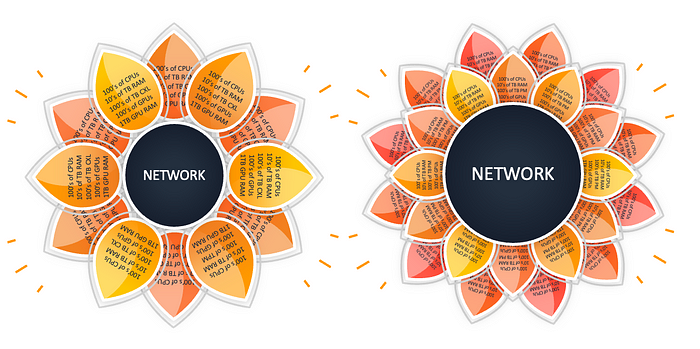

Next generation architectures will use CXL3.0 switches to connect processing nodes, pooled memory and I/O resources into very large coherent fabrics within a rack, and use Ethernet between racks. I call this a Petalith architecture, and it will replace Infiniband and other specialized interconnects inside the rack.

Clouds using Ethernet that are multipath optimized using libfabric and features like EFA on AWS are going to be increasingly competitive, and Ethernet will replace other interconnects between racks.

This story starts over twenty years ago, when I was a Distinguished Engineer at Sun Microsystems and Shahin Khan asked me to be the Chief Architect for the High Performance Technical Computing team he was running. When I retired from my Amazon VP position earlier this year and was looking for a way to support a consulting, analyst and advisory role, I reached out to Shahin again, and joined him at OrionX.net. Part of the reason is that I’ve always been interested in HPC, but haven’t had the opportunity to focus on it in the intervening years.

So this November Shahin and I went to SC22 in Dallas, TX together, as analysts, and started out in the media briefing event where the latest Top500 Report was revealed and discussed. Jack Dongarra talked about the scores, and pointed out the low efficiency on some important workloads. There was quite a lot of analysis in the discussion, but no mention of interconnect trends, so I asked whether the fix for efficiency needed better interconnects. The answer was yes, and that they had some slides on interconnect trends but hadn’t included them in the deck. The next day Jack presented his Turing Award Lecture as the keynote for the event (HPCwire has a good summary of the whole talk), and later in the week he was on a panel discussion on “Reinventing HPC” where he repeated the point. Here are two of his slides:

The Top500 Linpack results are now led by the Oak Ridge National Labs HP/Cray Frontier system at over an exaflop for 64bit floating point dense matrix factorization, but there are many important workloads represented by the HPCG conjugate gradient benchmark, where Frontier only gets 14.1 petaflops, which is 0.8% of peak capacity. HPCG is led by Japan’s RIKEN Fugaku system at 16 petaflops, which is 3% of its peak capacity. This is almost four times better on a relative basis, but even so, it’s clear that most of the machine’s compute resources are idle. In comparison, for Linpack Frontier operates at 68% of peak capacity. Most of the top supercomputers are similar to Frontier: they use AMD or Intel CPUs, with GPU accelerators, and Cray Slingshot or Infiniband networks in a Dragonfly+ configuration. Fugaku is very different: it uses an ARM CPU with a vector processor, connected via a 6D torus. The vector processor avoids some memory bottlenecks, and the interconnect helps as well. Over time we are seeing more applications with more complex physics and simulation characteristics, so HPCG is becoming more relevant as a benchmark.
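To make the "almost four times better" comparison concrete, here is a minimal Python sketch that uses only the rounded percentages quoted above. The dictionary and function names are my own, purely for illustration.

```python
# HPCG results as a fraction of peak, using the rounded figures quoted in the text.
HPCG_FRACTION_OF_PEAK = {
    "Frontier": 0.008,  # 0.8% of peak
    "Fugaku": 0.03,     # 3% of peak
}


def relative_efficiency(system_a: str, system_b: str) -> float:
    """How many times more of its peak system_a achieves on HPCG than system_b."""
    return HPCG_FRACTION_OF_PEAK[system_a] / HPCG_FRACTION_OF_PEAK[system_b]


def idle_fraction(system: str) -> float:
    """Fraction of peak capacity that is not delivered on HPCG."""
    return 1.0 - HPCG_FRACTION_OF_PEAK[system]


ratio = relative_efficiency("Fugaku", "Frontier")
print(f"Fugaku vs Frontier on HPCG, relative to peak: {ratio:.2f}x")
for name in HPCG_FRACTION_OF_PEAK:
    print(f"{name}: {idle_fraction(name):.1%} of peak unused on HPCG")
```

Because the inputs are rounded, the ratio is only a rough guide, but it shows why both systems leave the great majority of their peak capacity unused on this benchmark.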

We spent most of the conference walking around the expo and talking to people, rather than attending technical sessions, and asked several people why other vendors weren’t copying Fugaku, and what improvements in architecture were on the horizon. Some people said that they had tried pitching that kind of architecture, but customers wanted the Frontier style systems. Some people see the choice as between specialized and more off the shelf technology, and that leveraging the greater investment in off the shelf components was the way to go in the long term. In recent years GPU development that was originally funded by the gaming market (and ended up being used as a supercomputer accelerator) has been dominated by use in the AI/ML accelerator market. However the latest AI/ML optimized GPUs are reducing support for 64bit floating point in favor of 8, 16, and 32bit options that provide better performance for training and inference workloads. This is a worrying trend for the HPC supercomputer market, and while a new HPL-MxP mixed-precision benchmark shows that it’s possible to leverage lower precision floating point, there’s a lot of existing code that needs 64bit.

During the Reinventing HPC panel session, there was some discussion of the cost of designing custom processors for HPC, and a statement that it was too high, in the hundreds of millions of dollars. However Shahin and I think this cost is more likely to be in the $20–40M range. Startups like SiPearl and Esperanto are bringing custom chips to market without raising hundreds of millions of dollars. There is a trend in the industry where Apple, Amazon, Google and others are now designing their own CPUs and GPUs, and NVIDIA has added the ARM based Grace CPU to its Hopper GPU in its latest generation. The emergence of chiplet technology also allows higher performance and integration without having to design every chip from scratch. If we extrapolate this trend, it seems likely that the cost of creating a custom HPC oriented CPU/GPU chiplet design (with extra focus on 64bit floating point and memory bandwidth, or whatever is needed) at relatively low volume is going to come into reach. To me this positions Fugaku as the first of a new mainstream, rather than a special purpose system.

I have always been particularly interested in the interconnects and protocols used to create clusters, and the latency and bandwidth of the various offerings that are available. I presented a keynote for Sun at Supercomputing 2003 in Phoenix Arizona and included the slide shown below.

I will come back to this diagram and update it to today later in this story, but the biggest difference is going to be bandwidth increases, as latency hasn't improved much over the years. The four categories still make sense: kernel managed network sockets, user mode message passing libraries, coherent memory interfaces, and on-chip communication.

If we look at Ethernet to start with, in the last 20 years we’ve moved from the era of 1Gbit being common and 10Gbit being the best available to 100Gbit being common, with many options at 200Gbit and 400Gbit, and a few at 800Gbit. The latency of Ethernet has also been optimized. The HP/Cray Slingshot used in Frontier is a heavily customized 200Gbit Ethernet based interconnect, with both single digit microsecond minimum latency and reduced long tail latency on congested fabrics. AWS has invested in optimizing Ethernet for HPC via the Elastic Fabric Adapter (EFA) option and the libfabric library. While the minimum latency is around 15–20us (which is far better than standard Ethernet), EFA delivers packets as a swarm across multiple paths, out of order, using the Scalable Reliable Datagram (SRD) protocol, and maintains a low tail latency for real world workloads. AWS over-provisions its network to handle a wide variety of multi-tenant workloads, so there tend to be more routes between nodes than in a typical enterprise datacenter, and AWS supports HPC cluster oriented topologies. Many HPC workloads synchronize work on a barrier, and work much better if there’s a consistently narrow latency distribution without a long tail. Most other interconnects operate in-order on a single path, and if anything delays or loses one packet, the whole message is delayed. So while EFA has higher minimum latency for small transfers, it often has lower and more consistent maximum latency for larger transfers than other approaches.
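The barrier argument can be illustrated with a toy model. The sketch below is not a measurement of any real fabric: it assumes each node independently either arrives on time or is delayed by a fixed penalty, and every number in it (stall probability, penalty, base latencies) is hypothetical. Since a barrier finishes only when the slowest participant arrives, the chance that at least one node stalls grows quickly with node count.

```python
def probability_any_stall(n_nodes: int, stall_probability: float) -> float:
    """Chance that at least one of n independent nodes hits a stall."""
    return 1.0 - (1.0 - stall_probability) ** n_nodes


def expected_barrier_us(n_nodes: int, base_us: float,
                        stall_probability: float, stall_penalty_us: float) -> float:
    """Expected barrier completion time in this two-outcome model.

    The barrier takes base_us if nobody stalls, and base_us + stall_penalty_us
    if at least one participant stalls, because it waits for the slowest node.
    """
    return base_us + stall_penalty_us * probability_any_stall(n_nodes, stall_probability)


# Hypothetical fabrics, for illustration only:
#  - "single path": low minimum latency, but a rare long stall on congestion or loss
#  - "multipath":   higher minimum latency, with no long tail in this toy model
for n in (1, 100, 1000):
    single_path = expected_barrier_us(n, base_us=2.0, stall_probability=0.001,
                                      stall_penalty_us=500.0)
    multipath = expected_barrier_us(n, base_us=18.0, stall_probability=0.0,
                                    stall_penalty_us=0.0)
    print(f"{n:5d} nodes: single path {single_path:7.1f}us, multipath {multipath:5.1f}us")
```

Under these made-up parameters the low-latency fabric wins for a single node and loses at a thousand nodes, which is the shape of the trade-off described above. Real tail behavior is far more complicated than a fixed penalty.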

Twenty years ago there was a variety of commercial interconnects like Myrinet, and several Infiniband vendors and chipsets. Over the years they consolidated down to Mellanox, which is now owned by NVIDIA, and OmniPath, which was sold to Cornelis by Intel. Part of the attraction of Infiniband is that it’s accessed from a user mode library like MPI, rather than a kernel call. The libfabric interface brings this to Ethernet, but there is still a latency advantage to Infiniband, which is around 1 microsecond minimum latency end to end on current systems. The minimum latency hasn’t changed much over 20 years, but Infiniband runs at 400Gbit/s nowadays, and the extra bandwidth means that latency for large amounts of data has greatly reduced. However the physical layer is the same nowadays for Infiniband and Ethernet, so the difference is in the protocol and the way switches are implemented.
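A simple way to see why flat minimum latency still translates into much faster large transfers is the usual first-order model: transfer time is roughly minimum latency plus message size divided by bandwidth. The sketch below uses the 1 microsecond and 400Gbit/s figures from the text; the 10Gbit/s figure for an older link is my assumption for comparison, and the model ignores protocol overhead, congestion and switch hops.

```python
def transfer_time_us(size_bytes: float, latency_us: float, bandwidth_gbit_s: float) -> float:
    """First-order transfer time: fixed latency plus serialization time.

    1 Gbit/s is 1e9 bits per second, which is 1e3 bits per microsecond.
    """
    bits = size_bytes * 8
    bits_per_us = bandwidth_gbit_s * 1e3
    return latency_us + bits / bits_per_us


LATENCY_US = 1.0            # minimum latency quoted in the text
CURRENT_GBIT_S = 400.0      # current Infiniband rate quoted in the text
OLDER_GBIT_S = 10.0         # ASSUMPTION: illustrative older link rate

for size in (64, 64 * 1024, 1_000_000):
    old = transfer_time_us(size, LATENCY_US, OLDER_GBIT_S)
    new = transfer_time_us(size, LATENCY_US, CURRENT_GBIT_S)
    print(f"{size:>9} bytes: {old:8.2f}us at {OLDER_GBIT_S:.0f}Gbit/s, "
          f"{new:6.2f}us at {CURRENT_GBIT_S:.0f}Gbit/s")
```

For tiny messages the two links are nearly indistinguishable because the fixed latency dominates, while for megabyte-sized messages the bandwidth term dominates and the faster link is many times quicker.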

The most interesting new development this year is that the industry has consolidated several different next generation interconnect standards around Compute Express Link — CXL, and the CXL3.0 spec was released in mid-2022.

CXL3.0 doubles the speed and adds a lot of features to the existing CXL2.0 spec, which is starting to turn up as working silicon like this 16 port CXL2.0 switch from XConn shown in the Expo. Two of each of the small plugs makes up a connection, and the two large edge connectors at the top are to take memory or other CXL cards.

CXL is a memory protocol, as shown in the third block in my diagram from 2003, and provides cache coherent latency around 200ns, and up to 2 meters maximum distance. This is just about enough to cable together systems within a rack into a single CXL3.0 fabric. It has wide industry support: CXL3.0 is going to be built into future CPU designs from ARM, and earlier versions of CXL are integrated into upcoming CPUs from Intel and others. The physical layer and connector spec for CXL3.0 is the same as PCIe 6.0, and in many cases the same silicon will talk both protocols, so that the same interface can be used to connect conventional PCIe I/O devices, or can use CXL to talk to I/O devices and pooled or shared memory banks.

The capacity of each CXL3.0 port is 16 lanes wide (x16) at 64GT/s, which works out at 128GBytes/s in each direction, 256GBytes/s in total. CPUs can be expected to have two or four ports. Each transfer is a 256byte flow control unit (FLIT) that contains some error check and header information, and over 200 bytes of data.
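The port arithmetic above can be checked with a few lines of Python. This is a back-of-envelope sketch, assuming one bit per transfer per lane and ignoring encoding and protocol overhead; the 200 byte payload is the lower bound stated in the text, not an exact figure from the spec, and the function names are my own.

```python
def port_bandwidth_gbytes_per_s(lanes: int = 16, gigatransfers_per_s: float = 64.0) -> float:
    """Raw one-direction bandwidth of a port in GBytes/s.

    ASSUMPTION: each transfer carries one bit per lane, so
    lanes * GT/s gives Gbit/s, and dividing by 8 gives GBytes/s.
    """
    return lanes * gigatransfers_per_s / 8.0


def flit_payload_fraction(payload_bytes: int = 200, flit_bytes: int = 256) -> float:
    """Lower bound on the share of each FLIT that carries data."""
    return payload_bytes / flit_bytes


one_way = port_bandwidth_gbytes_per_s()
print(f"Per port: {one_way:.0f} GBytes/s each way, {2 * one_way:.0f} GBytes/s total")
print(f"FLIT payload fraction: at least {flit_payload_fraction():.1%}")
for ports in (2, 4):
    print(f"CPU with {ports} ports: {ports * one_way:.0f} GBytes/s each way")
```

So a CPU with four such ports would have on the order of half a terabyte per second of raw fabric bandwidth in each direction, before FLIT overhead is taken into account.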

The way CXL2.0 can be used is to have pools of shared memory and I/O devices behind a switch, then to allocate capacity as needed from the pool. With CXL3.0 the memory can also be set up as coherent shared memory and used to communicate between CPUs. It also allows switches to be cascaded.

I updated my latency vs. bandwidth diagram and included NVIDIA’s NVLINK interconnect that they use to connect GPUs together. It runs at 300–900GBytes/s but they don’t specify minimum latency, so I assume it’s similar to Infiniband. It’s not a cache coherent memory protocol like CXL, and it’s optimized to run over a shorter distance, mostly within a single chassis.

Comparing CXL3.0 to Ethernet, Slingshot and Infiniband, it’s lower latency and higher bandwidth, but limited to connections inside a rack. The way the current Slingshot networks are set up in Dragonfly+ configurations is that a group of CPU/GPU nodes in a rack are fully connected via switch groups, and there are relatively fewer connections between switch groups. A possible CXL3.0 architecture could use it to replace the copper wired local connections, and use Ethernet for the longer distance fiber optic connections between racks. Unlike Dragonfly, where the local clusters have a fixed configuration of memory, CPU, GPU and connections, the flexibility of a CXL3.0 fabric allows the cluster to be configured with the right balance of each for a specific workload. I’ve been calling this architecture Petalith. Each rack-scale fabric is a much larger “petal” that contains hundreds of CPUs and GPUs and tens to hundreds of terabytes of memory, and a cluster of racks connected by many 800Gbit Ethernet links could contain a petabyte of RAM.
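As a rough sizing exercise for the petabyte figure, the sketch below counts how many rack-scale petals would be needed at different memory capacities per rack. The per-rack values are example points within the "tens to hundreds of terabytes" range mentioned above, not a proposed configuration, and decimal units (1 petabyte = 1000 terabytes) are assumed.

```python
import math


def racks_for_capacity(target_tb: float, per_rack_tb: float) -> int:
    """Number of racks needed to reach target_tb of pooled memory."""
    return math.ceil(target_tb / per_rack_tb)


PETABYTE_TB = 1000  # ASSUMPTION: decimal units

for per_rack_tb in (50, 100, 250):  # hypothetical per-rack capacities
    racks = racks_for_capacity(PETABYTE_TB, per_rack_tb)
    print(f"{per_rack_tb:4d} TB per rack -> {racks:3d} racks for a petabyte")
```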

When I asked some CXL experts whether they saw CXL3.0 replacing local interconnects within a rack, they agreed that this was part of the plan. However when I asked them what the programming model would be, is it message passing with MPI, or shared memory with OpenMP, they didn’t have an answer and it appears that topic hadn’t been discussed. There are several different programming model approaches I can think of, and I think it’s also worth looking at the Twizzler memory oriented operating system work taking place at UC Santa Cruz. This topic needs further research and additional blog posts!

This should all play out over the next 2–3 years. I expect roadmap level architectural concepts along these lines at SC23, first prototypes with CXL3.0 silicon at SC24, and hopefully some interesting HPCG results at SC25. I’ll be there to see what happens, and to see if Petalith catches on…